
Try It On, Send It Back

Why AR try-ons haven’t solved returns and what might be missing

The Expectation Gap

Augmented reality promised to transform the way we shop by reducing uncertainty, speeding up decision-making, and creating more confident, connected experiences from the comfort of home. The initial idea was simple: try on a product virtually, see how it looks, and click purchase with the same ease you’d feel in a store. However, despite widespread adoption, particularly within beauty and fashion, return rates remain high. While the technology continues to improve, the emotional gap between seeing something on a screen and feeling certain about it in person persists, suggesting that convenience is no longer the problem; confidence is.

Why It’s Still Not Working

While AR experiences often appear polished and functional, they still lack the intuition that reflects real-life decision-making. Many tools stop at visual accuracy without accounting for personal context: a shopper’s skin tone in different lighting, their sensitivity to texture, or the fits and angles they prefer for their body type across categories.

What We Thought AR Would Solve

Virtual try-on technology initially seemed to offer a win-win. Customers would spend less time guessing, brands would enjoy higher conversions and lower return costs, and the shopping experience would become more seamless, all with a tap of the screen. From lipsticks to trainers to sunglasses, virtual try-on tools gave people a faster, more interactive way to engage with products than they had been used to in e-commerce. However, many of these tools have struggled to translate interest into long-term satisfaction. The ability to preview a product doesn’t always mean consumers feel ready to commit to it, suggesting that current virtual try-on technology isn’t enough.

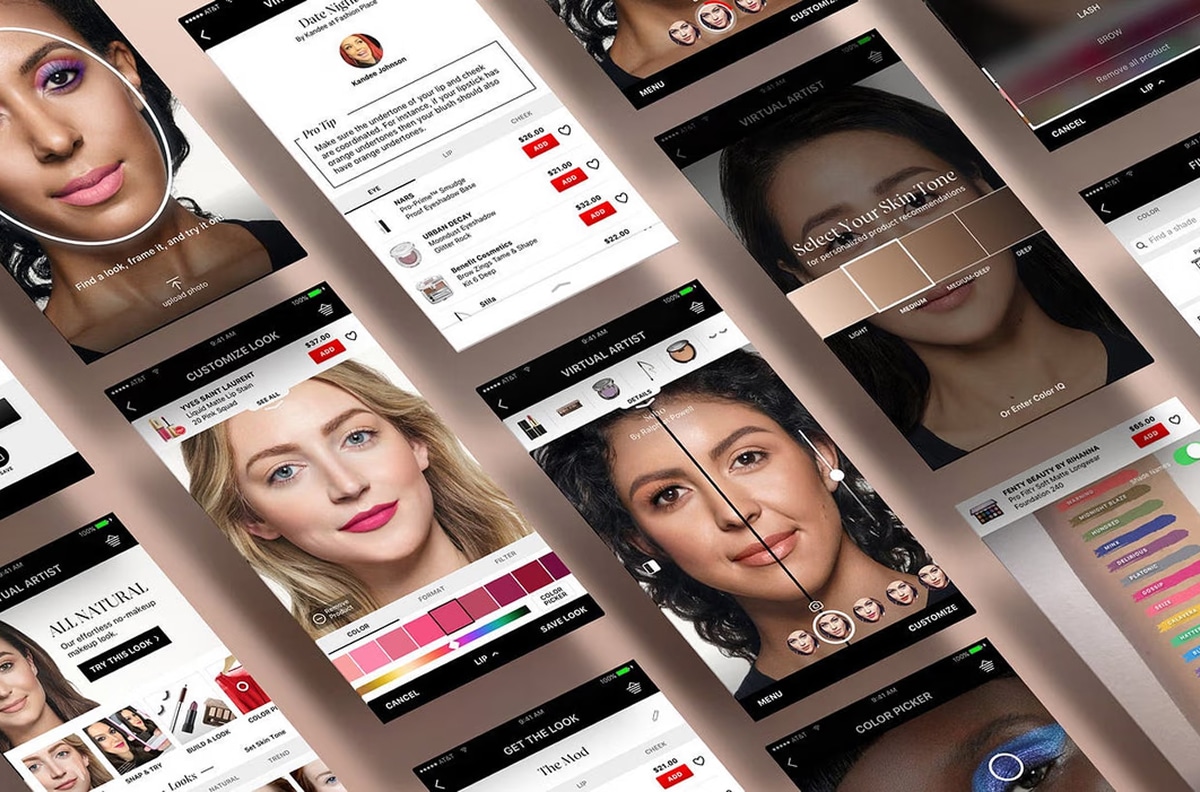

Sephora

There’s also a lack of memory built into the experience. Past purchases, returns, reviews, and even behavioural patterns are rarely carried over, making each try-on feel like starting from scratch. The issue is not that the technology isn’t working; it’s that it hasn’t yet learned to work around the individual consumer well enough to deliver long-term satisfaction.

Could AI Be The Missing Link?

While AR focuses on visualisation, AI has the potential to bring in something more intuitive: personalisation. The combination of the two could move try-on tools beyond surface-level interaction into something more adaptive and meaningful. Instead of showing what a lipstick might look like on a model, AI could adjust for undertones and lighting conditions while keeping previous feedback in mind. Sizing recommendations could evolve from basic charts to predictive insights based on body shape, return behaviour, and data from similar customers. Some of this is already underway. A 2023 Shopify report found that 62% of online shoppers were more likely to buy from a brand that offered tailored sizing or product suggestions, while McKinsey reported that brands using AI-driven personalisation correctly could reduce returns by as much as 30%.

While these results are not guaranteed, they point to an important shift in expectations. Shoppers no longer want the best product in general; they want the best possible product for them.

Some Brands Are Getting Closer

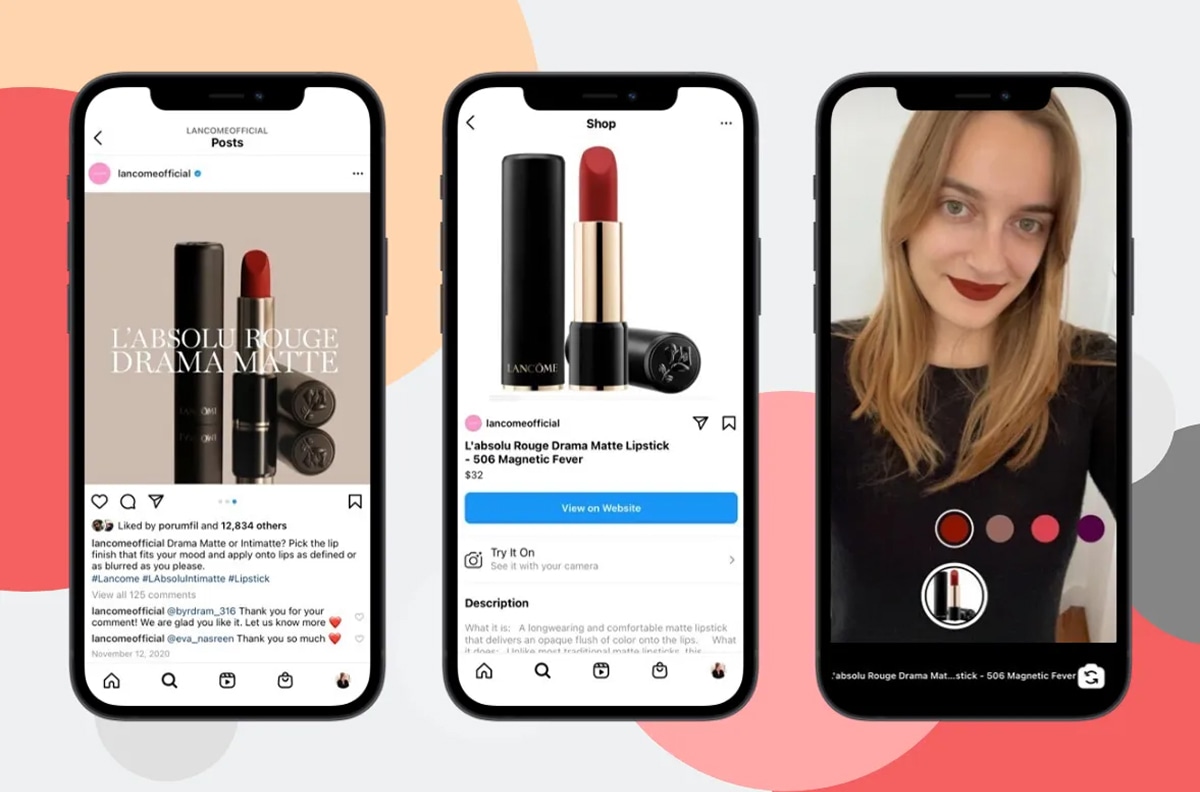

A few brands have started to bridge this gap, combining the immersive nature of AR with the adaptability of data-informed tools. For instance, L’Oréal’s ModiFace allows users to virtually try on makeup in real time, adjusting to skin tone and lighting conditions to create a more realistic experience.

L’Oréal

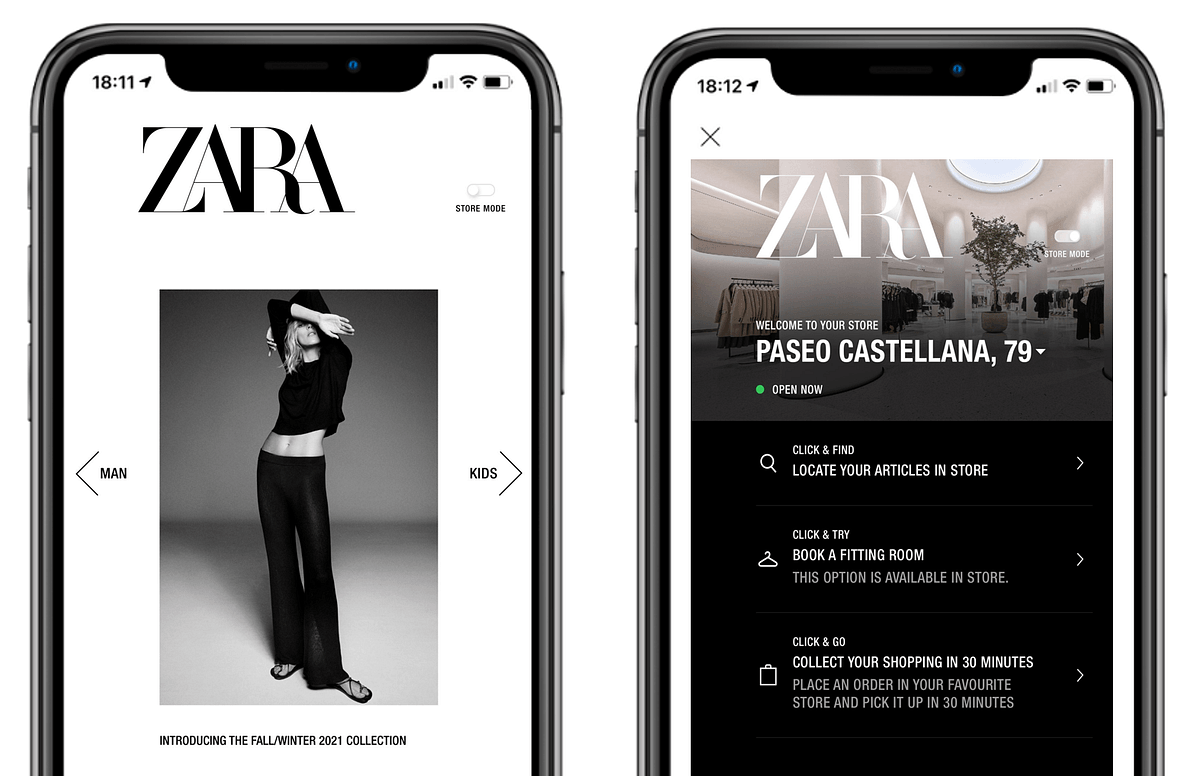

Warby Parker’s app draws on the styles a consumer has previously tried, their face shape, and their saved preferences to guide recommendations. Zara has launched Store Mode, an in-app feature that shows clothing on a range of AI-generated body types and sizes, helping customers envision how an item might actually look and fit in the real world.

Zara

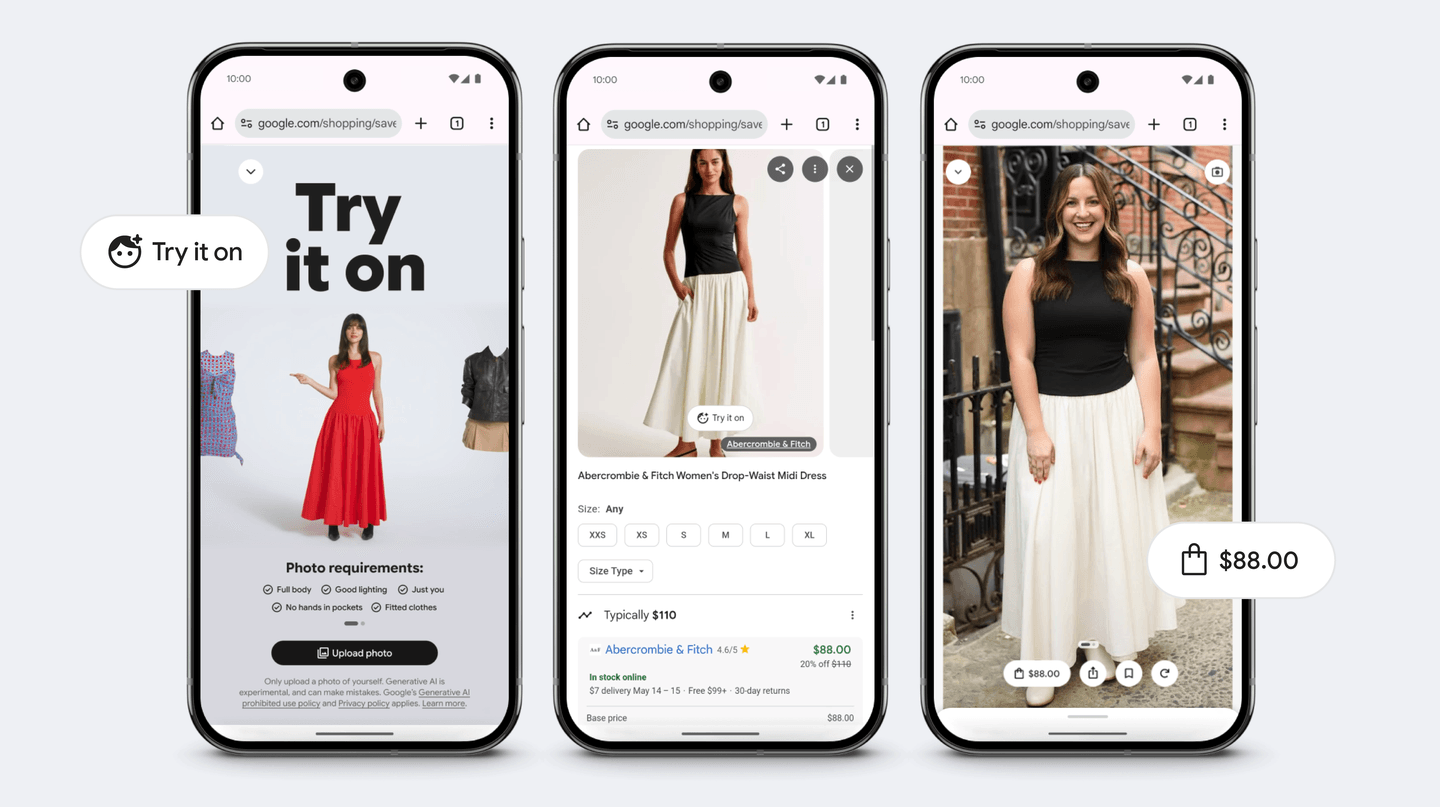

Google goes one step further with its updated shopping tools, which allow users to upload a photo of themselves and see how clothing would look on their own body. Using generative AI, the tools show how apparel fits, stretches and moves in context, not just on a model but on the consumer. This brings personalisation into sharper focus, making AR visualisation feel truly tailored.

Confidence Isn’t Always Digital

While the tools are becoming more capable, there is still a gap in how they connect with users on a deeper level. Technology can show what a product looks like, but it rarely helps people understand how it feels, and for many shoppers, especially Gen Z and millennials, that distinction matters more than ever. For these audiences, value lies in experience rather than efficiency. That includes tools that reflect different body types, lighting conditions, undertones and shopping behaviours, all without requiring users to explain themselves at every step.

As expectations evolve, so does the role of technology. Consumers are not just looking for better visuals; they want to feel personally understood. This is where AI personalisation might offer the most potential. By learning from past behaviour, recognising patterns and adjusting to individual needs, AI could help shift virtual try-ons from surface-level visuals to something that feels truly responsive. To be effective, these tools will need to do more than offer convenience. They will need to reflect personalisation, memory and a sense of relevance, showing that the brand understands who the shopper is, not just what they look like. Until that becomes reality, consumer satisfaction will depend on more than what appears on screen.